The metaverse is about to gain a new sensory dimension: researchers at Carnegie Mellon University are adding ultrasound hardware to a Quest 2 headset so that users can feel sensations on the mouth. In a collaboration between the university’s Human-Computer Interaction Institute and the Future Interfaces Group, the team has developed a technique that uses ultrasound to stimulate a user’s lips, making them feel a “kissing” or “drinking” sensation. According to Vivian Shen, a researcher at Carnegie Mellon’s Robotics Institute, if a user interacts with a virtual water fountain, their mouth can “feel” it. “Every time you lean down and think you should be feeling the water, all of the sudden, you feel a stream of water across your lips. It’s cool. It makes the experience much more immersive.”

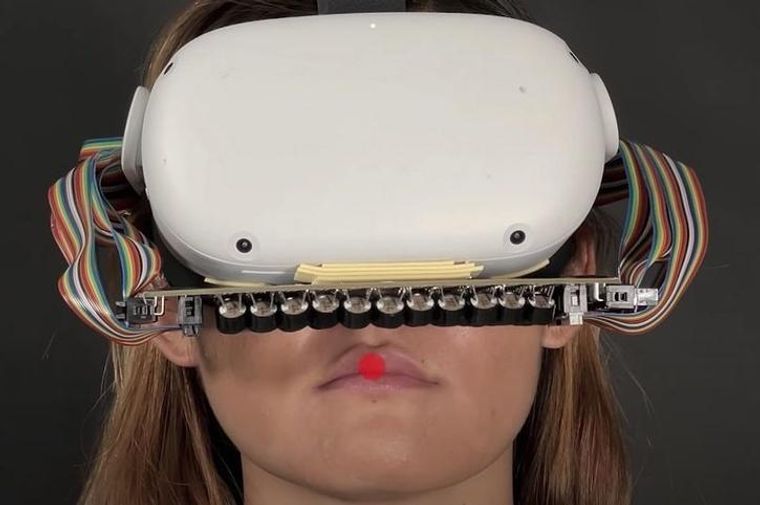

The technology works by adding an array of ultrasonic transducers to a Quest 2 headset, produced by Meta (previously Facebook). Because the mouth is quite sensitive, it can register the ultrasound these transducers direct at it as touch. While previous headsets didn’t include mouth-facing hardware, probably to avoid looking clunky, the researchers believe that mouth stimulation adds greatly to the virtual reality experience. The transducers also work in tandem: their firing is timed so that the waves reinforce one another at a chosen point. “If you time the firing of the transducer just right, you can get them to constructively interfere at one point in space,” Shen added. With 64 of these transducers set just above the mouth, the researchers can target the lips and tongue with ultrasound.
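To make the timing idea concrete, here is a minimal Python sketch of how firing delays for a phased array could be computed so that every wave reaches one focal point at the same instant. It is not the researchers' implementation. The article gives only the transducer count (64); the flat 8 x 8 layout, the 10.5 mm spacing, the focal point coordinates, and the function names `grid_positions` and `focus_delays` are all assumptions made for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C


def grid_positions(rows=8, cols=8, pitch=0.0105):
    """Centers (x, y, z) in meters of a flat rows x cols array in the z = 0 plane.

    The 8 x 8 layout and 10.5 mm pitch are assumed values, not from the article.
    """
    positions = []
    for r in range(rows):
        for c in range(cols):
            x = (c - (cols - 1) / 2) * pitch
            y = (r - (rows - 1) / 2) * pitch
            positions.append((x, y, 0.0))
    return positions


def focus_delays(positions, focal_point, speed=SPEED_OF_SOUND):
    """Firing delay in seconds for each transducer.

    The transducer farthest from the focal point fires first (delay 0).
    Closer ones wait just long enough that all wavefronts arrive together
    and interfere constructively at the focal point.
    """
    distances = [math.dist(p, focal_point) for p in positions]
    farthest = max(distances)
    return [(farthest - d) / speed for d in distances]


positions = grid_positions()
focal_point = (0.0, -0.02, 0.05)  # hypothetical target 5 cm in front of the array
delays = focus_delays(positions, focal_point)

# Arrival time at the focus = firing delay + travel time; these should all match.
arrival_times = [
    delay + math.dist(p, focal_point) / SPEED_OF_SOUND
    for delay, p in zip(delays, positions)
]
print(f"{len(delays)} transducers, largest delay {max(delays) * 1e6:.1f} microseconds")
```

Moving `focal_point` and recomputing the delays steers the focus without moving any hardware, which is how an array like this could in principle trace a swipe across the lips.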

Most people know ultrasound from medical imaging, for example during pregnancy or after an injury. These sound waves, which human ears cannot hear, penetrate skin and tissue to create an image. The researchers at Carnegie Mellon University used similar ultrasound to target the mouth, and even the hands, to create tactile sensations. With the ultrasound on their hands, users feel as though they can push virtual objects, such as buttons. Unfortunately, the mouth and hands are really the only areas where this stimulation can be achieved. According to Shen: “You can’t really feel it elsewhere; our forearms, our torso - those areas lack enough of the nerve mechanoreceptors you need to feel the sensation.”

Ultrasound in the Metaverse

To test their new headset, the researchers had 16 participants try out the technology. The sensory effects included swipes, vibrations, and point pulses directed at the mouth and hands. One user explained that without these effects, “It was difficult to tell when things were supposed to touch my face.” The researchers found that the mouth-targeted effects, such as teeth-brushing or feeling a bug walk across the lips, were more successful than the effects targeting the hands. Some effects, such as drinking from the virtual water fountain, could also be a little disorienting. “It’s weird because you feel the water but it’s not wet,” Shen explained.

While their initial tests were a success, Shen and the team know they have a long way to go before this technology is more widely adopted. But the team is hopeful they will get there soon. “Our phased array strikes the balance between being really expressive and affordable,” Shen added. For those using the metaverse as an escape or a place to experiment with sexuality or romance, this device could be huge. The implications of this new technology could be significant for the metaverse industry, where sensory experiences are currently limited to sight and sound. From kissing to smoking to blowing up balloons, this technology could add another layer to the virtual environment, drawing more individuals in and making users feel more connected than ever.
